Model Context Protocol (MCP): The Universal Connector for AI Agents

March 24, 2025

AI Agent · Tools · MCP


The Model Context Protocol (MCP) from Anthropic is an open standard for connecting AI models to tools, APIs, and data sources in a structured and secure way. It lets developers build AI agents that interact with external systems such as databases, web services, or internal company tools without writing a custom, hardcoded integration for each one.

🔍 What MCP Does

Think of MCP as a universal adapter for AI agents. It defines how external tools (such as APIs, databases, and file systems) expose their functionality to a large language model (LLM) through a standard interface based on JSON-RPC 2.0.
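To make the JSON-RPC 2.0 part concrete, here is a minimal sketch of the messages a client sends. `tools/list` and `tools/call` are method names from the MCP specification; the helpers `make_request` and `parse_response` are hypothetical, the `read_file`/`path` tool call is an illustrative placeholder, and a real client would first perform the `initialize` handshake and send these messages over a transport instead of printing them.

```python
import json


def make_request(request_id, method, params=None):
    """Build a JSON-RPC 2.0 request object (a plain dict)."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def parse_response(raw, expected_id):
    """Decode a JSON-RPC 2.0 response and return its result, or raise on error."""
    message = json.loads(raw)
    if message.get("jsonrpc") != "2.0" or message.get("id") != expected_id:
        raise ValueError("not a response to the expected request")
    if "error" in message:
        error = message["error"]
        raise RuntimeError(f"JSON-RPC error {error['code']}: {error['message']}")
    return message["result"]


# Discover which tools a server exposes
list_tools = make_request(1, "tools/list")

# Invoke a tool by name with JSON arguments ("read_file" and "path"
# are illustrative placeholders, not a specific server's schema)
call_tool = make_request(2, "tools/call", {"name": "read_file", "arguments": {"path": "notes.md"}})

print(json.dumps(list_tools))
print(json.dumps(call_tool))
```

The request `id` is what lets a client match each response to the request it answers, which matters once several calls are in flight on one connection.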

🧠 Why It Matters

MCP enables:

- Tool use by AI agents: agents can make API calls, look up documents, take actions, and more.

- Extensibility: tools can be registered dynamically, and models can reason about which tools to use and when.

- Interoperability: one MCP-compliant tool server can be used by any system that supports MCP.
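Dynamic registration works because a server advertises its tools at runtime instead of having them hardcoded into the client. The sketch below is a hypothetical in-memory registry (`ToolRegistry` is not part of any MCP SDK). The `name`/`description`/`inputSchema` fields and the `notifications/tools/list_changed` notification follow the MCP specification; a server that declares the `listChanged` tools capability sends that notification so clients know to re-fetch the tool list.

```python
class ToolRegistry:
    """Hypothetical in-memory tool registry for an MCP-style server (not the official SDK)."""

    def __init__(self):
        self.tools = {}
        self.outbox = []  # notifications queued for the connected client

    def register(self, name, description, input_schema, handler):
        self.tools[name] = {
            "name": name,
            "description": description,
            "inputSchema": input_schema,  # JSON Schema describing the arguments
            "handler": handler,
        }
        # A notification has no "id"; it tells the client to re-fetch tools/list
        self.outbox.append({"jsonrpc": "2.0", "method": "notifications/tools/list_changed"})

    def list_tools(self):
        # Result shape for tools/list; the Python handler stays on the server
        return {"tools": [{k: v for k, v in tool.items() if k != "handler"} for tool in self.tools.values()]}

    def call(self, name, arguments):
        return self.tools[name]["handler"](arguments)


registry = ToolRegistry()
registry.register(
    "add",
    "Add two integers",
    {"type": "object", "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}}, "required": ["a", "b"]},
    lambda args: args["a"] + args["b"],
)
print([tool["name"] for tool in registry.list_tools()["tools"]])
print(registry.call("add", {"a": 2, "b": 3}))
```

The model only ever sees the name, description, and schema; that metadata is what it reasons over when deciding which tool to call.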

🧱 What It's Not

- A framework for building agents

- A fundamental change to how agents work

- A way to code agents

🔌 MCP Core Concepts

The Three Components

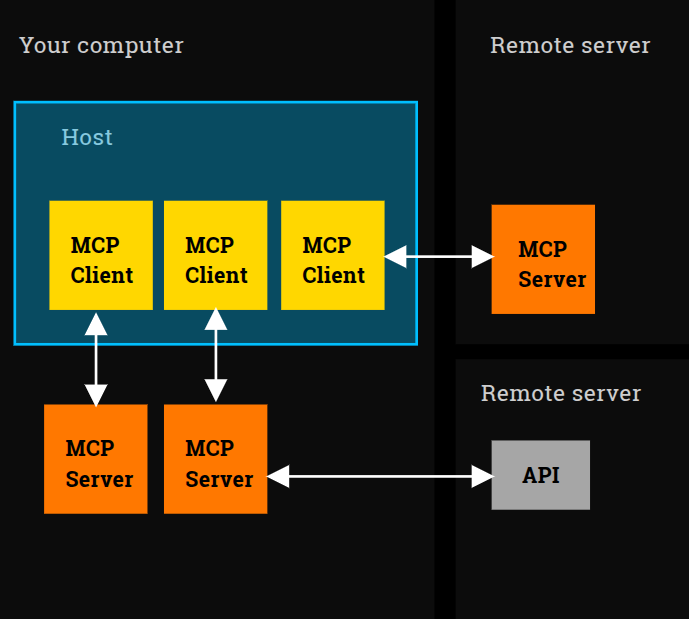

- Host: an LLM app, such as Claude, or an agent architecture

- MCP Client: lives inside the Host and maintains a 1:1 connection with an MCP Server

- MCP Server: provides tools, context, and prompts
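The three roles can be sketched in-process as follows. This is a toy under stated assumptions: `ToyServer` and `ToyClient` are hypothetical names, there is no transport, and the `initialize` handshake that real MCP sessions begin with is omitted. The `tools/list` and `tools/call` result shapes and the `-32602` code for an unknown tool follow the MCP specification.

```python
import json


class ToyServer:
    """Hypothetical in-process MCP-style server exposing a single 'echo' tool."""

    def __init__(self):
        self.tools = {
            "echo": {
                "name": "echo",
                "description": "Return the given text unchanged",
                "inputSchema": {
                    "type": "object",
                    "properties": {"text": {"type": "string"}},
                    "required": ["text"],
                },
            }
        }

    def handle(self, raw):
        """Dispatch one JSON-RPC request string and return the response string."""
        request = json.loads(raw)
        request_id, method = request["id"], request["method"]
        params = request.get("params", {})
        if method == "tools/list":
            return self._reply(request_id, result={"tools": list(self.tools.values())})
        if method == "tools/call":
            if params.get("name") not in self.tools:
                return self._reply(request_id, error={"code": -32602, "message": "Unknown tool"})
            # 'echo' is the only tool, so any known name lands here
            text = params["arguments"]["text"]
            return self._reply(request_id, result={"content": [{"type": "text", "text": text}], "isError": False})
        return self._reply(request_id, error={"code": -32601, "message": "Method not found"})

    @staticmethod
    def _reply(request_id, result=None, error=None):
        body = {"jsonrpc": "2.0", "id": request_id}
        body.update({"error": error} if error is not None else {"result": result})
        return json.dumps(body)


class ToyClient:
    """Hypothetical client: each instance talks to exactly one server (the 1:1 link)."""

    def __init__(self, server):
        self.server = server
        self.next_id = 0

    def request(self, method, params=None):
        self.next_id += 1
        message = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            message["params"] = params
        reply = json.loads(self.server.handle(json.dumps(message)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


# The host (for example a chat app) would create one client per server it uses
client = ToyClient(ToyServer())
names = [tool["name"] for tool in client.request("tools/list")["tools"]]
result = client.request("tools/call", {"name": "echo", "arguments": {"text": "hi"}})
print(names, result["content"][0]["text"])
```

A host that talks to three servers would simply hold three client instances, which keeps each connection's request IDs and state separate.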

🛠️ Architecture

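📡 Transport Mechanisms

- Stdio: the host spawns the server as a local process and exchanges messages over its standard input/output

- SSE (Server-Sent Events): communicates over HTTP(S); the client sends messages as HTTP POST requests, and the server streams messages back over an SSE connection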
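Both transports carry the same JSON-RPC messages; only the framing differs. The sketch below assumes the stdio framing described in the MCP specification (one JSON message per line, with no embedded newlines) and standard SSE `event:`/`data:` lines; the `message` event name mirrors MCP's HTTP+SSE transport, and the helper function names are hypothetical.

```python
import io
import json


def write_stdio_message(stream, message):
    """Frame one JSON-RPC message for stdio: compact JSON on a single line."""
    # json.dumps escapes newlines inside strings, so the line has no embedded newline
    stream.write(json.dumps(message, separators=(",", ":")) + "\n")


def read_stdio_messages(stream):
    """Yield JSON-RPC messages from a newline-delimited stream."""
    for line in stream:
        line = line.strip()
        if line:
            yield json.loads(line)


def format_sse_event(message, event="message"):
    """Frame one JSON-RPC message as a Server-Sent Events event (blank line ends it)."""
    return f"event: {event}\ndata: {json.dumps(message)}\n\n"


# Simulate a process's stdout with an in-memory buffer
buf = io.StringIO()
write_stdio_message(buf, {"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
write_stdio_message(buf, {"jsonrpc": "2.0", "method": "notifications/initialized"})
buf.seek(0)
msgs = list(read_stdio_messages(buf))
print(len(msgs))

print(format_sse_event({"jsonrpc": "2.0", "id": 1, "result": {"tools": []}}), end="")
```

Keeping the framing separate from message handling is what lets the same server logic run locally over stdio or remotely over HTTP.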

MCP Marketplaces

- 🔗 mcp.so

- 🔗 glama.ai

- 🔗 smithery.ai

- 🔗 huggingface.co

🔐 Security Model

Depending on how the host and server are implemented, MCP setups can provide:

- Permission-scoped tool access

- Per-user or per-session authorization

- Audit logging for traceability
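These controls usually live in the host or server code around each tool call rather than in the message format itself. As an illustration only, here is a hypothetical host-side gate (`ScopedToolGate` and the `mcp.audit` logger name are assumptions, not MCP APIs) that checks a per-session allowlist before forwarding a `tools/call` and records every attempt.

```python
import logging
from datetime import datetime, timezone

logging.basicConfig(level=logging.INFO, format="%(message)s")
audit_log = logging.getLogger("mcp.audit")  # hypothetical logger name


class ScopedToolGate:
    """Hypothetical host-side gate: per-session tool allowlist plus an audit trail."""

    def __init__(self, session_permissions):
        # session_id -> set of tool names that session may call
        self.session_permissions = session_permissions
        self.records = []

    def authorize(self, session_id, tool_name):
        allowed = tool_name in self.session_permissions.get(session_id, set())
        # Record every attempt, allowed or not, for later review
        self.records.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "session": session_id,
            "tool": tool_name,
            "allowed": allowed,
        })
        audit_log.info("tool=%s session=%s allowed=%s", tool_name, session_id, allowed)
        return allowed


gate = ScopedToolGate({"alice-session": {"read_file"}})
print(gate.authorize("alice-session", "read_file"))    # True
print(gate.authorize("alice-session", "write_file"))   # False
print(gate.authorize("unknown-session", "read_file"))  # False
```

Denying by default (an unknown session gets an empty set) is the safer choice: a missing permission entry blocks the call instead of allowing it.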

🌐 Real-World Use Cases

- 🔎 Document search & retrieval

- 💬 Customer service AI agents accessing ticket systems

- 📊 Data analysis via SQL connectors

- 🛠️ DevOps automation using internal tools
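For the SQL case, the piece an MCP server adds is a tool handler that runs a query and returns the rows to the model. The sketch below is hypothetical (`run_readonly_query` is not from any real connector) and uses Python's built-in `sqlite3`; it reports failures in the result with `isError: true`, the way the MCP specification suggests for tool execution errors, so the model can see what went wrong.

```python
import json
import sqlite3


def run_readonly_query(conn, sql):
    """Hypothetical handler an MCP server could expose as a 'query' tool.

    Accepts a single SELECT statement and returns the rows as MCP-style
    text content. The string check is a naive guard for illustration; a
    real connector should also open the database read-only.
    """
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        return {"content": [{"type": "text", "text": "Only a single SELECT statement is allowed"}], "isError": True}
    try:
        cursor = conn.execute(statement)
    except sqlite3.Error as exc:
        # Report SQL errors back to the model instead of crashing the server
        return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    columns = [column[0] for column in cursor.description]
    rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
    return {"content": [{"type": "text", "text": json.dumps(rows)}], "isError": False}


# Demo data: an in-memory ticket table
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tickets (id INTEGER, status TEXT)")
conn.executemany("INSERT INTO tickets VALUES (?, ?)", [(1, "open"), (2, "closed"), (3, "open")])

print(run_readonly_query(conn, "SELECT status, COUNT(*) AS n FROM tickets GROUP BY status ORDER BY status"))
print(run_readonly_query(conn, "DROP TABLE tickets")["isError"])
```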

📦 Code Example

```python
import asyncio
import os

from dotenv import load_dotenv
from agents import Agent, Runner, trace
from agents.mcp import MCPServerStdio

load_dotenv(override=True)

# Let's use MCP in the OpenAI Agents SDK:
# 1. Create a client
# 2. Have it spawn a server
# 3. Collect the tools the server exposes


async def main():
    # Create the sandbox directory if it doesn't exist
    sandbox_path = os.path.abspath(os.path.join(os.getcwd(), "sandbox"))
    os.makedirs(sandbox_path, exist_ok=True)

    # Launch the filesystem MCP server via npx, restricted to the sandbox directory
    files_params = {
        "command": "npx",
        "args": ["-y", "@modelcontextprotocol/server-filesystem", sandbox_path],
    }

    async with MCPServerStdio(params=files_params) as server:
        file_tools = await server.list_tools()
        print("\nAvailable filesystem tools:")
        for tool in file_tools:
            # Flatten multi-line descriptions outside the f-string
            # (backslashes in f-string expressions need Python 3.12+)
            description = (tool.description or "").replace("\n", " ")
            print(f"{tool.name}: {description}")

        # Simple test with the Agent
        instructions = """
        You can read and write files in the sandbox directory.
        When writing files, make sure to use markdown format.
        """

        agent = Agent(
            name="file_handler",
            instructions=instructions,
            model="gpt-4o-mini",
            mcp_servers=[server],
        )

        with trace("write_test"):
            result = await Runner.run(agent, "Create a simple test.md file with 'Hello World!' in it")
            print("\nAgent result:", result.final_output)


if __name__ == "__main__":
    asyncio.run(main())
```

📚 Learn More

👉 Documentation: Anthropic’s official documentation on MCP